Have you ever received a phone call from someone claiming to be a loved one, urgently requesting money or assistance? Perhaps something about the voice felt slightly off, yet familiar enough to trigger concern. If so, you’re not alone: you may have encountered one of the growing number of AI voice clone scams.

These scams leverage artificial intelligence to generate voice replicas so realistic that they’re nearly indistinguishable from real speech. Cybercriminals are exploiting this technology to impersonate family members, coworkers, or authority figures with shocking accuracy. This emerging scam method is fast becoming one of the most insidious tools in the fraudster’s arsenal.

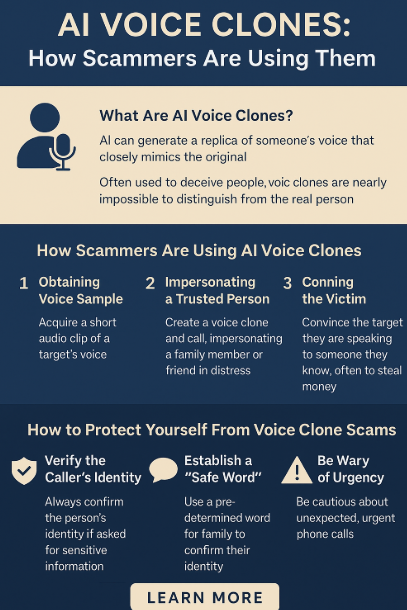

What Are AI Voice Clones?

AI voice cloning refers to the use of machine learning models, particularly generative deep learning and speech synthesis algorithms, to replicate a person’s voice. These systems analyze short voice recordings and reproduce tone, cadence, accent, and other vocal markers to produce a synthetic version that mimics the original speaker.

While this technology holds promise in entertainment, accessibility, and virtual assistant development, it has also opened the door to dangerous misuse in fraud.

How Scammers Use AI Voice Clones

Here’s a step-by-step look at how scammers typically exploit this technology:

- Voice Sample Collection: Scammers acquire short samples of a person’s voice from social media, voicemail, YouTube, or recorded calls.

- Voice Cloning Using AI Algorithms: With as little as 10 seconds of audio, deep learning models can generate a synthetic voice clone that sounds nearly identical to the original.

- Execution of the Scam: The attacker impersonates a family member or trusted contact in distress, claiming they need money urgently, often for bail, medical emergencies, or sudden travel problems.

- Emotional Manipulation: Victims, caught off guard by hearing a familiar voice, often act without verifying the identity of the caller, leading to financial loss or data compromise.

Real-Life Cases of AI Voice Clone Scams

These aren’t hypothetical scenarios; they’re happening right now. In several reported cases across the U.S. and Europe:

- Parents received calls from their “children” claiming they had been arrested or kidnapped and needed bail or ransom money.

- Executives were impersonated to authorize wire transfers or reveal sensitive business information.

In many of these cases, the cloned voices were virtually indistinguishable from the real individuals, making it nearly impossible to spot the deception in time.

How to Protect Yourself from AI Voice Scams

As this technology becomes more accessible, it’s essential to implement defensive habits that can protect you and your loved ones. Here are some best practices:

1. Always Verify Identity

If you receive a request involving money, personal data, or sensitive actions, pause. Hang up and call the person back on a number you already know and trust to confirm their identity. Do not rush to act on unknown or unexpected calls, especially if the situation seems emotionally charged.

2. Use Secret Codes or Safe Words

Establish a family code phrase or safe word system. If someone calls claiming to be a relative in distress, ask for the code. This is an easy and effective way to filter out imposters.

3. Be Wary of Urgent Requests

Scammers thrive on urgency. If the call pressures you to act immediately, especially when combined with emotional appeals, be cautious. This is a key red flag in synthetic voice fraud.

4. Raise Awareness Among Family and Friends

The more people know about AI voice scams, the fewer attacks succeed. Discuss this threat with elderly relatives and anyone less familiar with digital technology.
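For readers who think in checklists, the habits above can be sketched as a small decision procedure. This is only an illustration: the `CallContext` fields, the `triage_call` function, and the wording of each outcome are hypothetical names chosen for this sketch, not part of any real tool or official guidance.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CallContext:
    """What you can observe about a call (all fields are illustrative assumptions)."""
    asks_for_money_or_data: bool        # money, personal data, or a sensitive action
    pressures_immediate_action: bool    # "act now" urgency or emotional pressure
    verified_by_callback: bool = False  # you called back on a trusted number
    safe_word_correct: Optional[bool] = None  # None means you have not asked yet


def triage_call(call: CallContext) -> str:
    """Suggest a next step based on the best practices listed above."""
    # A wrong safe word is the clearest sign of an imposter.
    if call.safe_word_correct is False:
        return "likely imposter: hang up"
    # Independent confirmation on a trusted number outweighs how the voice sounds.
    if call.verified_by_callback:
        return "identity confirmed: proceed with care"
    # Sensitive requests and urgency are red flags until verified.
    if call.asks_for_money_or_data or call.pressures_immediate_action:
        return "pause: call back on a trusted number"
    return "no red flags: stay alert"


if __name__ == "__main__":
    # An urgent request for money that has not been verified yet.
    print(triage_call(CallContext(asks_for_money_or_data=True,
                                  pressures_immediate_action=True)))
```

The ordering is a design choice in this sketch: a failed safe word ends the call immediately, and a familiar-sounding voice is never treated as evidence on its own.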

What This Means for You

The ability to clone voices is not science fiction; it’s real and readily available. While tech-savvy scammers are using it to orchestrate increasingly sophisticated frauds, knowledge and vigilance can significantly reduce your risk of falling victim.

By adopting preventive measures like verifying requests, limiting how much of your voice is publicly available online, and keeping family aware of such scams, you can better safeguard against these emerging threats.

The Future of AI Voice Technology and Ethical Implications

Voice cloning is a double-edged sword. In legitimate use cases, it enables remarkable innovation in accessibility and media. However, the darker applications highlight the urgent need for ethics-driven development and regulation.

Tech platforms and policymakers must address how voice cloning data is sourced, stored, and used. Meanwhile, end users must exercise caution and scrutinize unexpected voice-based requests, regardless of how familiar the caller may sound.

Final Thoughts

AI voice clone scams represent one of the most pressing cybersecurity threats in the age of generative AI. As attackers grow more sophisticated, so must our defenses. Staying informed is your first line of protection.

Have you encountered a suspicious voice call recently? Share your experience or thoughts in the comments below and let others learn from your story.